One of the companies we love is FullStory. Recently, they wrote a nice piece arguing that when people buy a product, they're really hiring that product to do a job: a job they already needed done, but one the product makes easier.

This is true for data stories, too. In a nutshell, a data story assembles data, visuals, and text into a visual narrative about what the data means. Crafting an effective data story is not easy. It has to connect readers to their data, its meaning, and how it relates to their environment, all while helping them accomplish a meaningful task.

But don't despair! Give your data story these three jobs to do, and your readers will be more effective with their data.

Job #1: Tell them something they already know.

When you write a data story, the very first thing you have to do is build trust with your reader. Until they have confidence in your story, the best you can hope for is to drag them into the slog of figuring out whether they can trust it, which usually means in-depth, independent data forensics. Did somebody say "Party!"? Um, no.

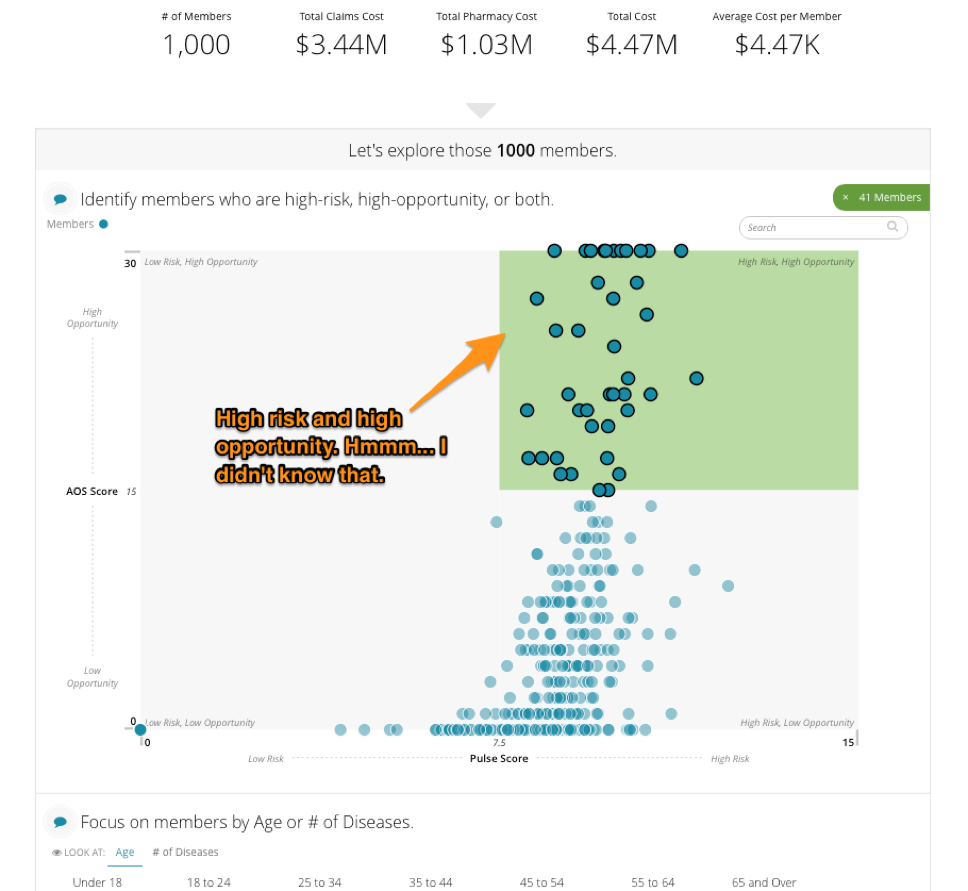

So, how do you build trust? By meeting them on common ground: tell them something that they already know and agree with. Here’s an example from an application we created using Juicebox, our data reporting application platform, that addresses the greatest opportunities for cost and care management in the world of population health.

We start by presenting a key metric: the total number of members. Most users are already familiar with this figure, so seeing it gives them the sense that we're both talking about the same thing. Now we're on the same page with the reader and, assuming we've gotten the number right, the data story is ready to do its next job.

Job #2: Tell them something they don't already know.

A data story that only tells readers what they already know isn't terribly useful. So the second job of the data story is to make them smarter by introducing them to something new. This new piece of information demonstrates the value of your data story. Done properly, it leaves the reader saying "A-ha! I see it!"

Continuing with our population health example from above, we introduce a bucketing of population members that singles out a high-risk/high-opportunity group. "Oh look, there are 41 people in that group who are at risk but who have a high opportunity for change."

But, as G.I. Joe always says, "knowing is half the battle." The other half? On to your data story's third and final job.

Job #3: Give them something to do.

If data is presented and no one acts, did it matter? If a tree falls in the forest and no one hears it, did it make a sound? If the rubber doesn't meet the road, is the cliché reality? Seriously though, data becomes truly useful when the new thing the audience learned inspires action. Continuing with our example, the user is presented with a list of the specific people who fall into the high-risk/high-opportunity bucket. Feeding these folks into a campaign to actively manage their risk might be the next step.

The more specific you can get with the recommendation, the better. This last step is most successful when your data story is written around a very specific and targeted narrative. This is what we at Juice call a short story... but more on that another day.

The next time you write a data story, give it these three jobs, and we're certain you'll make your readers more effective at using your data. Need some more help with your data story? Send us a message at info@juiceanalytics.com!