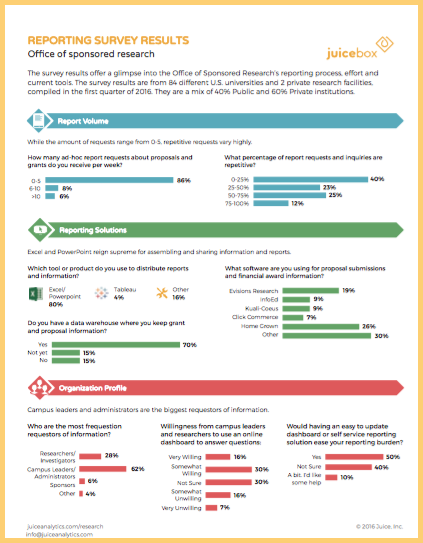

This summer, we set out to learn how prospective users of our research application would use it to address hypothetical problems in their day-to-day workflow. We surveyed a couple thousand departmental leaders, presenting them with three scenarios, ranging from grant proposal preparation to tenure decisions, and asking how they would turn to the app to meet the need in each one. Their responses were very interesting, and we believe they apply to how people use many different types of data products and reporting solutions. Here are our findings.

Benchmarks and Discussions - Specific to the research app, we found that when department heads write a grant proposal, they prefer to consult their peers and use those peers' previously successful proposals as a benchmark for the quality a particular sponsor expects.

Similarly, users of our Healthcare app connect with their coworkers about training assessments and work performance. They, too, use their peers' experience and expertise as a barometer for their own performance in training and on the job. The chat feature built into Juicebox applications facilitates these discussions right in the app: you can highlight metrics, share them, and start a conversation.

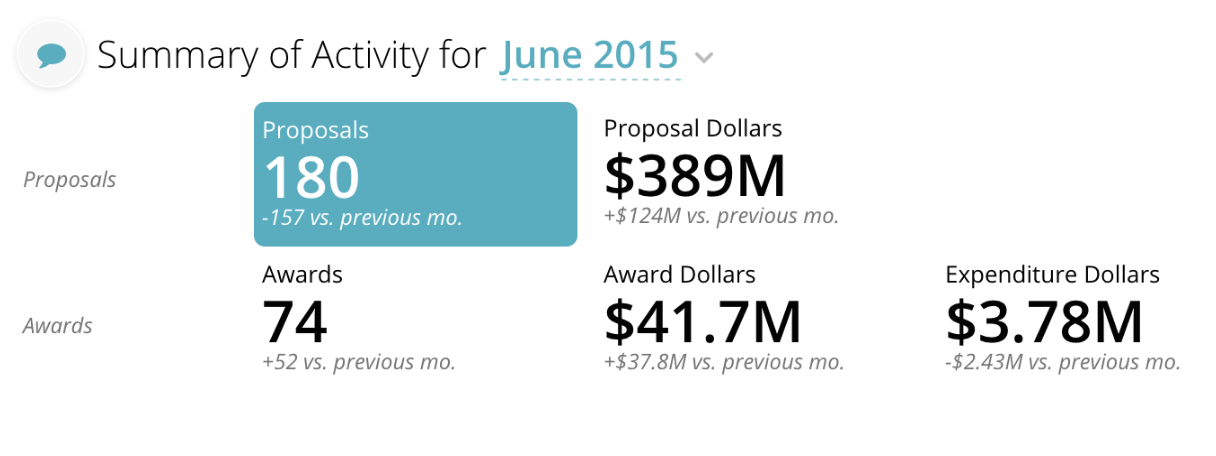

Our chat feature in action

Performance Measurement - Specific to the research app, we found that department heads take their faculty's research activity very seriously. In fact, they consider a faculty member's research activity to carry more weight in promotion and tenure decisions than teaching evaluations, service, or the opinions of other faculty members in the department.

At Juice, we are no strangers to performance metrics. Managers in all types of industries use our apps to measure employee performance for promotion decisions and general reviews. We take performance measurement to the next level by giving users seemingly unlimited ways to filter the data.

An example of research performance measurement

By listening to the needs and preferences of our users, we've designed our apps to let users analyze peer performance within their institution and communicate with each other seamlessly. This takes the guesswork out of deciding whom to consult and what to ask in those data-informed conversations. To see the kind of insights Juicebox can surface, check out a quick demonstration of our research application or schedule a demo.